I wrote about my exploration of natural language querying for Wikipedia in the previous three blog posts.

In Part 1, I suggested that building a collection of questions and answers for an article can help natural language question answering. One missing piece was suggesting an answer to a new question that is not part of the QA set for the article.

In Part 2, I tried using distilbert-base-cased-distilled-squad with ONNX optimization to answer the questions.

[Read More]

Natural language question answering in Wikipedia - an exploration - Part 3

I wrote about my exploration of natural language querying for Wikipedia in the previous two blog posts.

In Part 1, I suggested that building a collection of questions and answers for an article can help natural language question answering. One missing piece was suggesting an answer to a new question that is not part of the QA set for the article.

In Part 2, I tried using distilbert-base-cased-distilled-squad with ONNX optimization to answer the questions.

[Read More]

Natural language question answering in Wikipedia - an exploration - Part 2

A few days back, I posted an experiment on natural language querying for Wikipedia by generating questions and answers. I suggested that building such a collection of questions and answers can help natural language question answering. One missing piece was suggesting an answer to a new question that is not part of the QA set for the article.

As a continuation of that experiment, I explored various options for answering questions.

[Read More]

Natural language question answering in Wikipedia - an exploration

In this blog post, I explain the prospects of providing questions and answers as an additional content format in Wikipedia, along with a human-in-the-loop approach for producing them and a prototype.

Introduction

Wikipedia is a hub for curiosity, with people visiting the site in search of answers to their questions. However, they typically arrive at Wikipedia via intermediaries such as search engines, which direct them to the relevant article. While Wikipedia’s keyword-based search function can be helpful, it may not be sufficient for addressing more complex natural language queries.

[Read More]

One million Wikipedia articles by translation

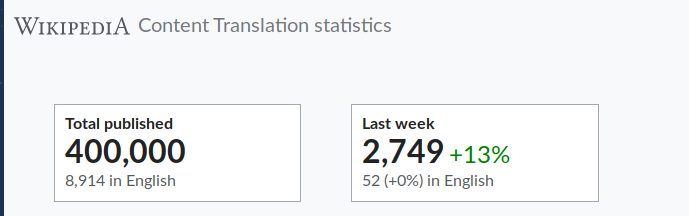

I am happy to share some news from my work at the Wikimedia Foundation. The Wikipedia article translation system, known as Content Translation, has reached the milestone of one million articles created. Since 2015, this has been my major project at WMF, and I am the lead engineer for it. The Content Translation system helps Wikipedia editors quickly translate and publish articles from one language wiki to another. This way, the knowledge gap between different languages is reduced.

[Read More]

Wikipedia turns eighteen, with four lakh translations

Today is Wikipedia's eighteenth birthday. English Wikipedia, with 58 lakh articles, and Malayalam Wikipedia, with around sixty thousand articles, continue their journey amid many limitations and challenges.

Although Wikipedia exists in 292 languages, the amount of content is not evenly distributed among them. For the past four years at the Wikimedia Foundation, my main job has been leading the technical work on a system that translates articles between languages with the help of machine translation and other aids.

Yesterday, the number of new articles added with the help of this system reached four lakh.

A short story of one lakh Wikipedia articles

At the Wikimedia Foundation, I am working on a project to help people translate articles from one language to another. The project started in 2014 and went to production in 2015.

Over the last year, a total of 100,000 new articles were created across many languages. A new article gets translated every five minutes, which comes to more than 2,000 articles per week.

The 100,000th Wikipedia page created with Content Translation is in Spanish, for the song ‘Crying, Waiting, Hoping’.

[Read More]

Translating HTML content using a plain text supporting machine translation engine

At Wikimedia, I am currently working on the ContentTranslation tool, a machine-aided translation system that helps translate articles from one language to another. The tool is now deployed in several Wikipedias, and people are creating new articles successfully.

The ContentTranslation tool provides machine translation as one of its translation tools, so that editors can use the output as an initial version to improve upon. We use Apertium as the machine translation backend and are planning to support more machine translation services soon.

[Read More]

Talk at Wikimania 2014

I presented my team's Content Translation project at Wikimania 2014 in London. Here is the video of the presentation.

Parsing CLDR plural rules in JavaScript

English and many other languages have only two plural forms: singular if the count is one, and plural for anything else, including zero.

Some other languages, however, have more than two plural forms. Arabic, for example, has six, referred to as the ‘zero’, ‘one’, ‘two’, ‘few’, ‘many’, and ‘other’ forms. Integers such as 11-26, 111, and 1011 take the ‘many’ form, while 3, 4, ..., 10 take the ‘few’ form.
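To make the categories concrete, here is a minimal sketch of plural category selection for whole numbers. It is written in Python for brevity and hard-codes the CLDR integer rules for English and Arabic instead of parsing rule strings, so it illustrates the idea only. The function names are hypothetical and not part of any library.

```python
def english_plural_category(n: int) -> str:
    """English has two forms: 'one' for exactly 1, 'other' for everything else."""
    return "one" if n == 1 else "other"


def arabic_plural_category(n: int) -> str:
    """Return the CLDR plural category for a non-negative integer in Arabic.

    Hard-coded from the CLDR cardinal rules for Arabic (integers only):
      zero:  n = 0
      one:   n = 1
      two:   n = 2
      few:   n % 100 = 3..10
      many:  n % 100 = 11..99
      other: everything else (for example 100, 101, 102)
    """
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    if n == 0:
        return "zero"
    if n == 1:
        return "one"
    if n == 2:
        return "two"
    remainder = n % 100
    if 3 <= remainder <= 10:
        return "few"
    if 11 <= remainder <= 99:
        return "many"
    return "other"


if __name__ == "__main__":
    for count in (0, 1, 2, 3, 10, 11, 26, 100, 111, 1011):
        print(count, arabic_plural_category(count))
```

An interface message system would use the returned category to pick the matching form of a message. Decimal numbers are deliberately left out of this sketch.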

When preparing interface messages for application user interfaces, grammatically correct sentences are a must.

[Read More]